Sitemap

A list of all the posts and pages found on the site. For you robots out there, there is an XML version available for digesting as well.

Pages

Posts

Future Blog Post

This post will show up by default. To disable scheduling of future posts, edit config.yml and set future: false.

Blog Post number 4

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 3

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 2

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 1

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Portfolio

Payload System for Indoor Mapping for Disaster Rescue

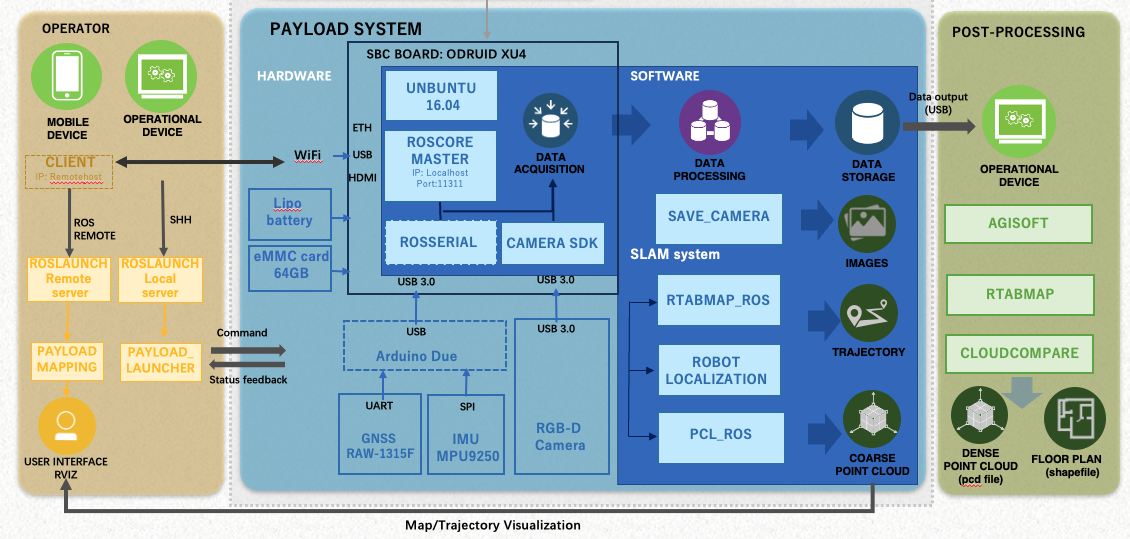

This project developed a payload system for indoor mapping specifically designed for disaster rescue purposes. The system consists of a depth camera, IMU (Inertial Measurement Unit), and a rover platform. The software implementation is based on RTMBSLAM (Real-Time Multi-Baseline SLAM) with Extended Kalman Filter (EKF) for sensor fusion, enabling robust and accurate indoor mapping in challenging environments. The payload system enables autonomous navigation and mapping in disaster scenarios where GPS signals are unavailable or unreliable. By fusing data from multiple sensors through EKF, the system provides reliable pose estimation and real-time mapping capabilities essential for rescue operations.
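The EKF fusion step can be illustrated with a minimal planar filter. This is a sketch under stated assumptions, not the project's implementation: it assumes a 2D pose state [x, y, theta], a motion model driven by a forward speed v (assumed to come from rover odometry) and the IMU yaw rate omega, and a full pose measurement from the SLAM front end. The class name `PlanarEKF` and all noise values are hypothetical.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


class PlanarEKF:
    """Hypothetical minimal EKF for a planar rover pose [x, y, theta]."""

    def __init__(self, q=(0.01, 0.01, 0.005), r=(0.05, 0.05, 0.02)):
        self.x = np.zeros(3)        # state: x, y, heading
        self.P = np.eye(3) * 0.1    # state covariance
        self.Q = np.diag(q)         # process noise (assumed values)
        self.R = np.diag(r)         # SLAM pose measurement noise (assumed values)

    def predict(self, v, omega, dt):
        """Propagate the state with forward speed v and IMU yaw rate omega."""
        x, y, th = self.x
        self.x = np.array([x + v * np.cos(th) * dt,
                           y + v * np.sin(th) * dt,
                           wrap_angle(th + omega * dt)])
        # Jacobian of the motion model with respect to the state
        F = np.array([[1.0, 0.0, -v * np.sin(th) * dt],
                      [0.0, 1.0, v * np.cos(th) * dt],
                      [0.0, 0.0, 1.0]])
        self.P = F @ self.P @ F.T + self.Q

    def update(self, z):
        """Correct the state with a pose measurement z = [x, y, theta]."""
        z = np.asarray(z, dtype=float)
        H = np.eye(3)                      # the pose is observed directly
        innov = z - H @ self.x
        innov[2] = wrap_angle(innov[2])    # keep the heading residual bounded
        S = H @ self.P @ H.T + self.R
        K = self.P @ H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innov
        self.x[2] = wrap_angle(self.x[2])
        self.P = (np.eye(3) - K @ H) @ self.P
```

A call to `predict` runs at the IMU rate, and `update` runs whenever a new SLAM pose arrives; the corrected estimate lies between the prediction and the measurement, weighted by their covariances.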

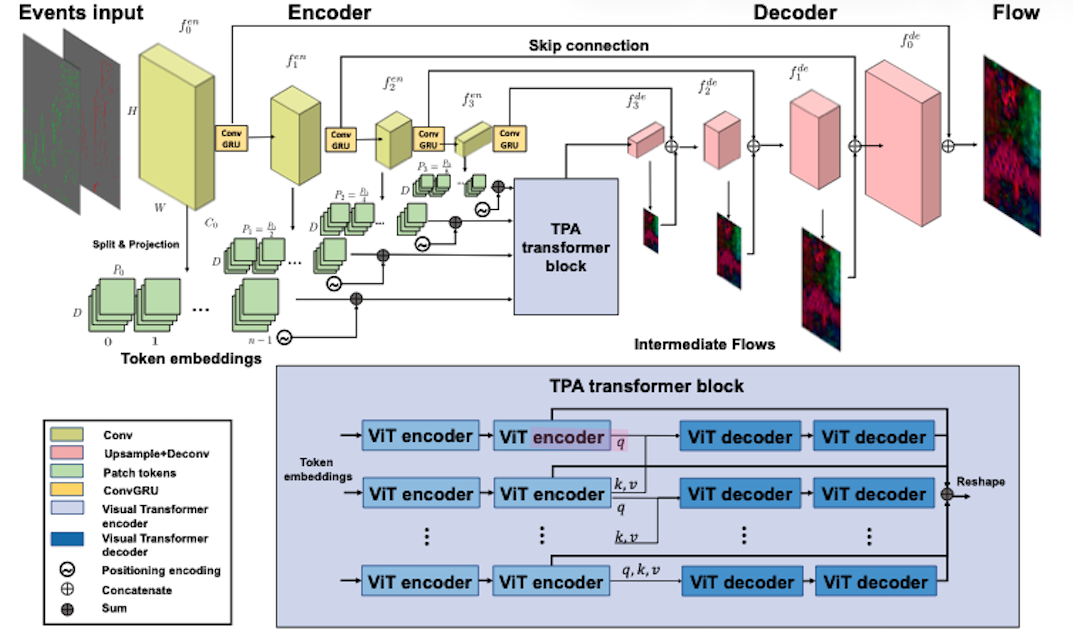

Event transformer FlowNet for optical flow estimation

In this project, I developed ET-FlowNet, a hybrid RNN-ViT architecture for optical flow estimation with event cameras. This work was the first to incorporate transformers into event-based optical flow estimation. Event cameras are bioinspired sensors that produce asynchronous and sparse streams of events, offering fast motion detection with low latency, high dynamic range, and low power consumption. I designed this architecture to address the need for fast and robust optical flow computation in robotics applications. Visual transformers (ViTs) are ideal candidates for learning global context in visual tasks, and rigid body motion is a prime case for their use, since long-range dependencies in the image hold during rigid body motion. I trained the network end to end using a self-supervised learning method. The results show performance comparable to, and in some cases exceeding, that of state-of-the-art coarse-to-fine event-based optical flow methods.
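Event-based networks usually need the sparse event stream converted into a dense tensor before it enters the network. The input representation used by ET-FlowNet is not described here, so the sketch below assumes a common choice: a temporal voxel grid in which each event's polarity is split linearly between its two nearest time bins. The function name and arguments are hypothetical, and timestamps are assumed to be sorted.

```python
import numpy as np


def events_to_voxel_grid(xs, ys, ts, ps, num_bins, height, width):
    """Accumulate events into a (num_bins, height, width) grid with linear temporal weighting."""
    grid = np.zeros((num_bins, height, width), dtype=np.float64)
    if len(ts) == 0:
        return grid
    ts = np.asarray(ts, dtype=np.float64)
    # Normalize timestamps to the range [0, num_bins - 1]
    t_span = max(ts[-1] - ts[0], 1e-9)
    t_norm = (num_bins - 1) * (ts - ts[0]) / t_span
    pol = np.where(np.asarray(ps) > 0, 1.0, -1.0)  # polarity mapped to +1 / -1
    xs = np.asarray(xs, dtype=np.int64)
    ys = np.asarray(ys, dtype=np.int64)
    left = np.floor(t_norm).astype(np.int64)
    frac = t_norm - left
    # Each event contributes (1 - frac) to its lower bin and frac to its upper bin
    np.add.at(grid, (left, ys, xs), pol * (1.0 - frac))
    right = left + 1
    valid = right < num_bins
    np.add.at(grid, (right[valid], ys[valid], xs[valid]), (pol * frac)[valid])
    return grid
```

`np.add.at` is used instead of fancy-index assignment so that several events falling on the same pixel and bin are summed rather than overwritten.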

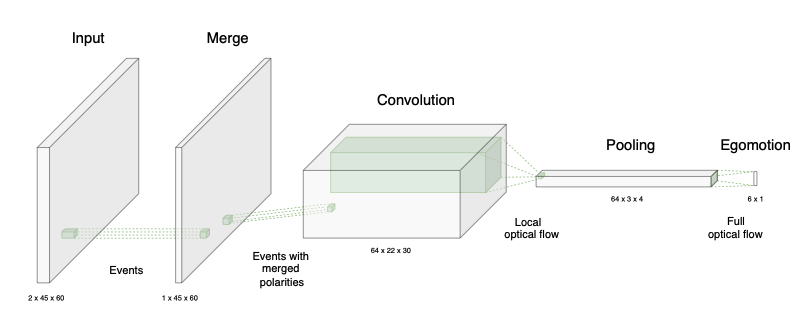

Egomotion from event-based SNN optical flow

I developed a method for computing egomotion using event cameras with a pre-trained optical flow spiking neural network (SNN). To address the aperture problem encountered in the sparse and noisy normal flow of the initial SNN layers, I designed and implemented a sliding-window bin-based pooling layer that computes a fused full flow estimate. To add robustness to noisy flow estimates, instead of computing the egomotion from vector averages, I developed an optimization approach based on the intersection of constraints. I also integrated a RANSAC step to robustly deal with outlier flow estimates in the pooling layer. I validated the approach on both simulated and real scenes and achieved results that compare favorably to state-of-the-art methods. The method may be sensitive to datasets and motion speeds different from those used for training, which is a limitation I identified during the evaluation.
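The intersection-of-constraints idea can be sketched for a single pooling bin. Each normal-flow vector gives a unit direction n_i and a magnitude m_i, and the full flow u must satisfy n_i · u = m_i, so two non-parallel constraints determine u and more constraints give a least-squares estimate. The NumPy sketch below wraps that solve in a basic RANSAC loop; it illustrates the technique rather than reproducing the SNN pooling layer, and the function names, tolerance, and iteration count are assumptions.

```python
import numpy as np


def ioc_flow(normals, mags):
    """Least-squares intersection of constraints: solve n_i . u = m_i for full flow u."""
    u, *_ = np.linalg.lstsq(normals, mags, rcond=None)
    return u


def ransac_ioc(normals, mags, iters=100, tol=0.1, seed=0):
    """Robust full-flow estimate for one bin from its normal-flow vectors."""
    normals = np.asarray(normals, dtype=float)
    mags = np.asarray(mags, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(mags), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(mags), size=2, replace=False)
        A = normals[idx]
        if abs(np.linalg.det(A)) < 1e-6:  # near-parallel constraints: skip this sample
            continue
        u = np.linalg.solve(A, mags[idx])
        inliers = np.abs(normals @ u - mags) < tol
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 2:
        # No consensus found: fall back to plain least squares on all constraints
        return ioc_flow(normals, mags), best_inliers
    # Refit on the consensus set
    return ioc_flow(normals[best_inliers], mags[best_inliers]), best_inliers
```

The final least-squares refit on the inlier set uses all consistent constraints rather than only the minimal pair, which reduces the effect of noise on the fused estimate.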

Spiking Neural Network Transformer for Event-based optical flow estimation

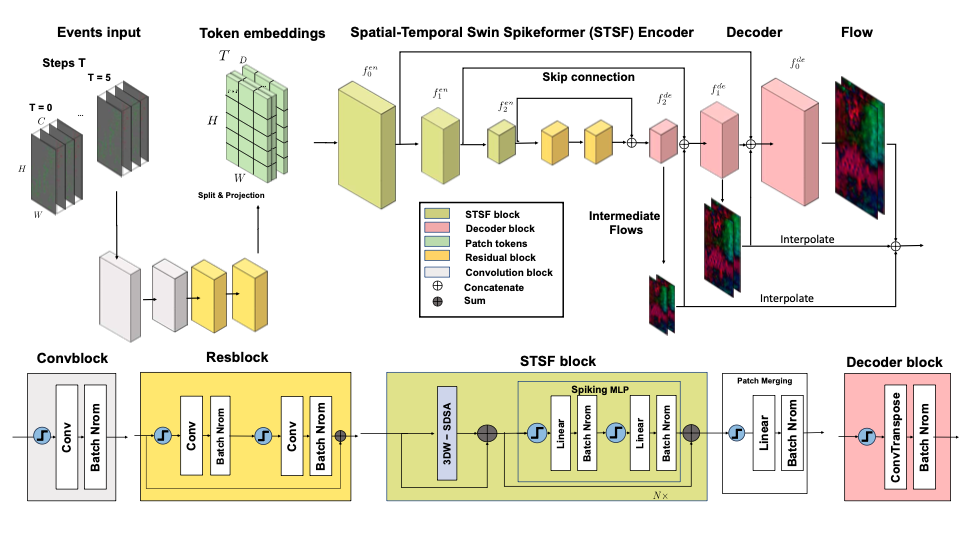

I developed SDformerFlow, the first fully spiking transformer-based architecture for optical flow estimation using event cameras. This work was part of my research on combining event cameras with spiking neural networks (SNNs) for efficient motion estimation. I designed and implemented two solutions: STTFlowNet, a U-shaped artificial neural network (ANN) architecture with spatiotemporal shifted window self-attention (swin) transformer encoders, and SDformerFlow, its fully spiking counterpart incorporating swin spikeformer encoders. I also developed two variants of the spiking version with different neuron models. This work represents the first use of spikeformers for dense optical flow estimation. I trained all models end to end using supervised learning, achieving state-of-the-art performance among SNN-based event optical flow methods on both the DSEC and MVSEC datasets, with a significant reduction in power consumption compared to equivalent ANNs.
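The spiking variants differ in their neuron models, which are not named here. As an illustration only, the sketch below assumes the widely used leaky integrate-and-fire (LIF) neuron in discrete time with a hard reset; `tau`, `v_threshold`, and `v_reset` are hypothetical defaults, not values from this work.

```python
import numpy as np


def lif_forward(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Run a discrete-time leaky integrate-and-fire neuron over T time steps.

    inputs: array of shape (T, ...) holding the input current at each step.
    Returns a binary (0.0 / 1.0) spike array of the same shape.
    """
    inputs = np.asarray(inputs, dtype=float)
    v = np.full(inputs.shape[1:], v_reset)  # membrane potential per neuron
    spikes = np.zeros_like(inputs)
    for t in range(inputs.shape[0]):
        # Leaky integration: charge with the input while decaying toward v_reset
        v = v + (inputs[t] - (v - v_reset)) / tau
        fired = v >= v_threshold
        spikes[t] = fired
        v = np.where(fired, v_reset, v)  # hard reset for neurons that spiked
    return spikes
```

With a constant input of 1.5 and `tau=2.0`, the potential goes 0.75, then 1.125 (spike and reset), and repeats, so the neuron fires on every second step; an input of 0.5 never reaches the threshold.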

FRAILWATCH: Robotic Frailty Monitoring System for Older People

FRAILWATCH is a research project funded by the 2023 Call for Research and Innovation Projects of Barcelona City Council and La Caixa Foundation (Project 23S06141-001). The project aims to promote the study of innovative social robotic capabilities with significant potential in the medical field. My contribution focused on developing a 3D monocular human action recognition benchmark for frailty assessment in elderly patients. I explored and evaluated multiple 3D human pose estimation approaches, including direct methods, 2D-3D lifting approaches, and SMPL-based methods. To validate them, I recorded a comprehensive test dataset with an OptiTrack motion capture system, which provides ground truth for evaluation. I trained several models tailored to specific frailty tests and integrated algorithms for assessing frailty indicators such as balance, gait speed, and other mobility metrics; this required resolving the monocular scale ambiguity and recovering the subject's trajectory in world coordinates. The work also included a thorough performance evaluation and comparison with stereo-based solutions, showing both the effectiveness and the limitations of monocular methods.
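Gait speed is one of the indicators mentioned above. Assuming a world-frame pelvis trajectory is already available, a minimal sketch computes it as horizontal path length divided by elapsed time. The project's actual approach to the scale ambiguity is not described here; the sketch assumes one simple option, rescaling by the subject's known height. The function names, the y-up convention, and the calibration step are hypothetical.

```python
import numpy as np


def scale_from_height(pred_height, true_height_m):
    """Hypothetical metric scale factor from the subject's known height."""
    return true_height_m / pred_height


def gait_speed(pelvis_xyz, timestamps, scale=1.0, up_axis=1):
    """Average walking speed in m/s from a world-frame pelvis trajectory.

    pelvis_xyz: (N, 3) positions; timestamps: (N,) seconds; scale converts to metres.
    The vertical axis (up_axis) is dropped so that pelvis bobbing is not counted.
    """
    p = np.asarray(pelvis_xyz, dtype=float) * scale
    horiz = np.delete(p, up_axis, axis=1)
    # Horizontal path length: sum of distances between consecutive frames
    dist = np.sum(np.linalg.norm(np.diff(horiz, axis=0), axis=1))
    duration = timestamps[-1] - timestamps[0]
    return dist / duration
```

Summing frame-to-frame distances tends to overestimate the path on a noisy trajectory, so in practice the trajectory would likely be smoothed first.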

Human action recognition recorder for assistive robot

The objective of the project is to help elderly or disabled people maintain a healthy mental and physical life using intelligent robotic systems. My contribution focused on developing a human action recognition method based on 3D skeleton data for the Temi assistive robot. I worked directly with the Temi robot platform and developed an Android application that integrates several capabilities: it performs human pose estimation, recognizes basic human actions from the 3D skeleton data, and automatically records the time spent on each action. The system can run in real time for immediate feedback and monitoring, or save the data for offline analysis. This flexibility enables continuous monitoring and assessment of daily activities, giving healthcare professionals and caregivers valuable data for tracking the physical activity patterns of elderly or disabled users.
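The per-action time logging can be sketched as a reduction over per-frame recognition results. This assumes each frame carries a timestamp and an action label, and that a label holds until the next frame arrives; the function name and label strings are hypothetical, and the Android implementation itself is not reproduced here.

```python
from collections import defaultdict


def action_durations(timestamps, labels):
    """Total time in seconds spent in each action, from per-frame recognition results.

    Each frame's label is assumed to hold until the next frame's timestamp,
    so the last frame only marks the end of the recording.
    """
    totals = defaultdict(float)
    for i in range(len(labels) - 1):
        totals[labels[i]] += timestamps[i + 1] - timestamps[i]
    return dict(totals)
```

For example, `action_durations([0.0, 1.0, 2.0, 4.0, 5.0], ["sit", "sit", "stand", "walk", "walk"])` returns `{'sit': 2.0, 'stand': 2.0, 'walk': 1.0}`.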

Publications

Event transformer FlowNet for optical flow estimation

Published in 2022 British Machine Vision Conference, 2022

Event cameras are bioinspired sensors that produce asynchronous and sparse streams of events at image locations where an intensity change is detected. They can detect fast motion with low latency, high dynamic range, and low power consumption. Over the past decade, considerable effort has gone into developing event-camera solutions for robotics applications. In this work, we address their use for fast and robust computation of optical flow. We present ET-FlowNet, a hybrid RNN-ViT architecture for optical flow estimation. Visual transformers (ViTs) are ideal candidates for learning global context in visual tasks, and we argue that rigid body motion is a prime case for the use of ViTs, since long-range dependencies in the image hold during rigid body motion. We train the network end to end with a self-supervised learning method. Our results show performance comparable to, and in some cases exceeding, that of state-of-the-art coarse-to-fine event-based optical flow estimation methods.
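The global-context argument rests on self-attention: every patch token attends to every other token, so the output for one image region can depend on motion evidence anywhere in the frame. A minimal single-head scaled dot-product attention in NumPy shows this mechanism; the token count, dimensions, and random projection matrices are placeholders, and ET-FlowNet's actual transformer configuration is not reproduced here.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def global_self_attention(tokens, Wq, Wk, Wv):
    """Single-head scaled dot-product attention over all N patch tokens.

    tokens: (N, D); Wq, Wk, Wv: (D, d). Returns the (N, d) output and the
    (N, N) attention weights, where row i holds how much token i uses every token j.
    """
    q, k, v = tokens @ Wq, tokens @ Wk, tokens @ Wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return attn @ v, attn
```

Because the attention matrix is dense (N x N), a single layer already mixes information between distant patches, whereas a convolution needs many stacked layers to reach the same receptive field.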

Recommended citation: Y. Tian and J. Andrade-Cetto. Event transformer FlowNet for optical flow estimation, 2022 British Machine Vision Conference, 2022, London. http://www.iri.upc.edu/files/scidoc/2645-Event-transformer-FlowNet-for-optical-flow-estimation.pdf

Egomotion from event-based SNN optical flow

Published in 2023 ACM International Conference on Neuromorphic Systems, 2023

We present a method for computing egomotion using event cameras with a pre-trained optical flow spiking neural network (SNN). To address the aperture problem encountered in the sparse and noisy normal flow of the initial SNN layers, our method includes a sliding-window bin-based pooling layer that computes a fused full flow estimate. To add robustness to noisy flow estimates, instead of computing the egomotion from vector averages, our method optimizes the intersection of constraints. The method also includes a RANSAC step to robustly deal with outlier flow estimates in the pooling layer. We validate our approach on both simulated and real scenes, and our results compare favorably with state-of-the-art methods. However, our method may be sensitive to datasets and motion speeds different from those used for training, which limits its generalizability.

Recommended citation: Y. Tian and J. Andrade-Cetto. Egomotion from event-based SNN optical flow, 2023 ACM International Conference on Neuromorphic Systems, 2023, Santa Fe, NM, USA, pp. 8:1-8. http://www.iri.upc.edu/files/scidoc/2747-Egomotion-from-event-based-SNN-optical-flow.pdf

SDformerFlow: Spiking Neural Network Transformer for Event-based Optical Flow

Published in 2024 International Conference on Pattern Recognition (ICPR), 2024

Event cameras generate asynchronous and sparse event streams capturing changes in light intensity. They offer significant advantages over conventional frame-based cameras, such as a higher dynamic range and a much faster data rate, making them particularly useful in scenarios involving fast motion or challenging lighting conditions. Spiking neural networks (SNNs) share similar asynchronous and sparse characteristics and are well suited to processing data from event cameras. Inspired by the potential of transformers and spike-driven transformers (spikeformers) in other computer vision tasks, we propose SDformerFlow, a fully spiking transformer-based architecture for optical flow estimation. SDformerFlow incorporates swin spikeformer encoders and comes in variants with different neuron models. Our work demonstrates the first use of spikeformers for dense optical flow estimation. We conduct end-to-end training using supervised learning. Our models achieve state-of-the-art performance among SNN-based event optical flow methods and show a significant reduction in power consumption compared to equivalent ANNs.
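Power comparisons between SNNs and ANNs are commonly estimated by counting operations: ANN layers perform multiply-accumulate (MAC) operations, while spike-driven layers mostly perform accumulate (AC) operations, whose count scales with the firing rate and the number of time steps. The sketch below assumes the 45 nm per-operation energies often cited in the spiking-transformer literature (4.6 pJ per MAC, 0.9 pJ per AC). It does not reproduce this paper's estimation procedure or reported numbers, the function names are hypothetical, and it ignores details such as the first encoding layer, which typically still receives non-binary input.

```python
# Assumed per-operation energies at 45 nm (32-bit float), as commonly cited.
E_MAC_PJ = 4.6  # multiply-accumulate, used by ANN layers
E_AC_PJ = 0.9   # accumulate only, used by spike-driven layers

PJ_TO_MJ = 1e-9  # 1 pJ = 1e-12 J = 1e-9 mJ


def ann_energy_mj(flops):
    """Estimated ANN inference energy in millijoules from its MAC count."""
    return flops * E_MAC_PJ * PJ_TO_MJ


def snn_energy_mj(flops, firing_rate, timesteps):
    """Estimated SNN energy: synaptic operations = FLOPs x firing rate x time steps."""
    sops = flops * firing_rate * timesteps
    return sops * E_AC_PJ * PJ_TO_MJ
```

Under this model the SNN saves energy only while firing_rate x timesteps stays below roughly 4.6 / 0.9, which is why sparse spiking activity matters as much as the cheaper operation type.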

Recommended citation: Y. Tian and J. Andrade-Cetto. SDformerFlow: Spiking Neural Network Transformer for Event-based Optical Flow, 2024 International Conference on Pattern Recognition (ICPR), 2024. https://link.springer.com/chapter/10.1007/978-3-031-78354-8_30

Spatiotemporal swin spikeformer for event-based optical flow estimation

Published in arXiv, 2025

Event cameras generate asynchronous and sparse event streams capturing changes in light intensity. They offer significant advantages over conventional frame-based cameras, such as a higher dynamic range and a much faster data rate, making them particularly useful in scenarios involving fast motion or challenging lighting conditions. Spiking neural networks (SNNs) share similar asynchronous and sparse characteristics and are well suited to processing data from event cameras. Inspired by the potential of transformers and spike-driven transformers (spikeformers) in other computer vision tasks, we propose two solutions for fast and robust optical flow estimation for event cameras: STTFlowNet and SDformerFlow. STTFlowNet adopts a U-shaped artificial neural network (ANN) architecture with spatiotemporal shifted window self-attention (swin) transformer encoders, while SDformerFlow is its fully spiking counterpart, incorporating swin spikeformer encoders. Furthermore, we present two variants of the spiking version with different neuron models. Our work is the first to make use of spikeformers for dense optical flow estimation. We conduct end-to-end training for all models using supervised learning. Our models achieve state-of-the-art performance among SNN-based event optical flow methods on both the DSEC and MVSEC datasets, and show a significant reduction in power consumption compared to the equivalent ANNs.
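Shifted-window attention restricts self-attention to local spatiotemporal windows and shifts the window grid between consecutive blocks so that information can cross window borders. The partitioning step can be sketched in NumPy as below, assuming a (T, H, W, C) feature layout and dimensions divisible by the window size. The function names are hypothetical, and the attention mask that a full swin block applies after the cyclic shift is omitted.

```python
import numpy as np


def window_partition_3d(x, window):
    """Split a (T, H, W, C) feature map into non-overlapping (wt, wh, ww) windows.

    Returns an array of shape (num_windows, wt * wh * ww, C): one token sequence
    per window, inside which self-attention would be computed.
    """
    T, H, W, C = x.shape
    wt, wh, ww = window
    if T % wt or H % wh or W % ww:
        raise ValueError("feature dimensions must be divisible by the window size")
    x = x.reshape(T // wt, wt, H // wh, wh, W // ww, ww, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # window indices first, then positions inside
    return x.reshape(-1, wt * wh * ww, C)


def shifted_windows(x, window):
    """Cyclically shift by half a window along T, H, W, then partition (swin-style)."""
    shift = tuple(-(w // 2) for w in window)
    return window_partition_3d(np.roll(x, shift, axis=(0, 1, 2)), window)
```

Alternating between `window_partition_3d` and `shifted_windows` in consecutive blocks keeps the attention cost linear in the number of windows while still letting neighboring windows exchange information.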

Recommended citation: Y. Tian and J. Andrade-Cetto. SDformer-Flow: Spiking Neural Network Transformer for Event-based optical flow estimation, 2024, arXiv preprint arXiv:2409.04082. https://arxiv.org/abs/2409.04082

Talks

Spiking neural network for event camera ego-motion estimation

I was an invited speaker at NFM22, where I gave a talk on my research on SNNs for event-camera egomotion and optical flow estimation.

Event-based robot vision

I gave a talk at the IRI summer school 2024 about our research on using event cameras for motion estimation and SLAM.