Spiking Neural Network Transformer for Event-based Optical Flow Estimation

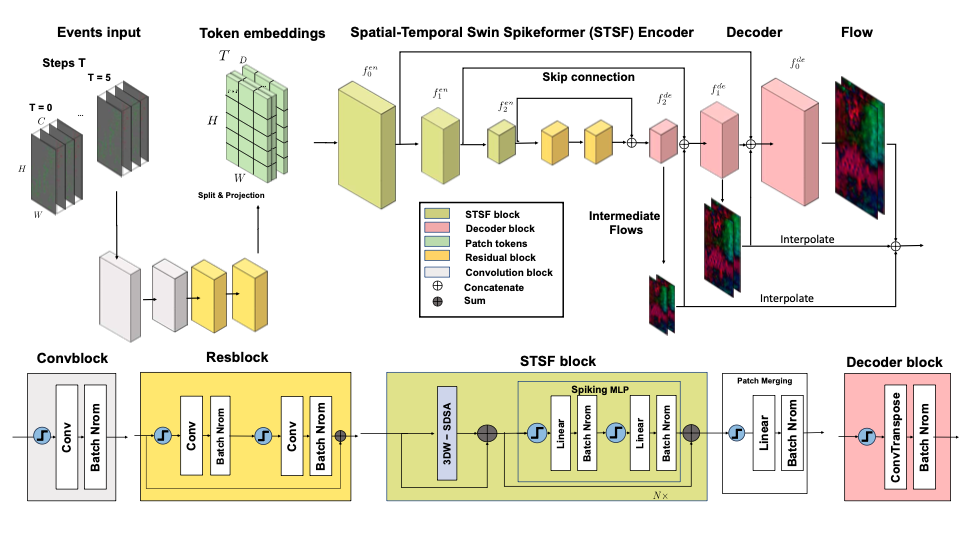

I developed SDformerFlow, the first fully spiking transformer-based architecture for optical flow estimation with event cameras, as part of my research on combining event cameras with spiking neural networks (SNNs) for efficient motion estimation. I designed and implemented two models: STTFlowNet, a U-shaped artificial neural network (ANN) with spatiotemporal shifted-window self-attention (Swin) transformer encoders, and SDformerFlow, its fully spiking counterpart with Swin spikeformer encoders. I also developed two variants of the spiking model that use different neuron models. This work is the first to use spikeformers for dense optical flow estimation. I trained all models end to end with supervised learning and achieved state-of-the-art performance among SNN-based event optical flow methods on both the DSEC and MVSEC datasets, with a significant reduction in power consumption compared with the equivalent ANNs.
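For readers new to spiking networks, here is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron. It shows how a spiking unit turns continuous input into binary spikes over time. LIF is used only because it is a common textbook neuron model; it is an assumption and not necessarily one of the neuron models used in SDformerFlow. The function name `lif_spike_train` and the parameter values (`tau`, `v_threshold`, `v_reset`) are hypothetical and chosen for illustration.

```python
def lif_spike_train(currents, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    currents: iterable of input currents, one value per time step.
    Returns a list of 0/1 spikes, one per time step.
    All parameter defaults are illustrative assumptions.
    """
    v = v_reset  # membrane potential starts at the reset value
    spikes = []
    for current in currents:
        # Leaky integration: the potential moves toward the input current
        # while decaying back toward v_reset with time constant tau.
        v = v + (current - (v - v_reset)) / tau
        if v >= v_threshold:
            spikes.append(1)  # emit a binary spike
            v = v_reset       # hard reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A strong constant input makes the neuron fire periodically.
    print(lif_spike_train([1.5] * 4))
    # A weak constant input never reaches the threshold.
    print(lif_spike_train([0.5] * 4))
```

Because the output is binary and sparse, downstream layers can replace most multiply-accumulate operations with accumulations that only happen when a spike occurs. This is the usual argument for why SNNs can consume less power than equivalent ANNs.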