Egomotion from event-based SNN optical flow

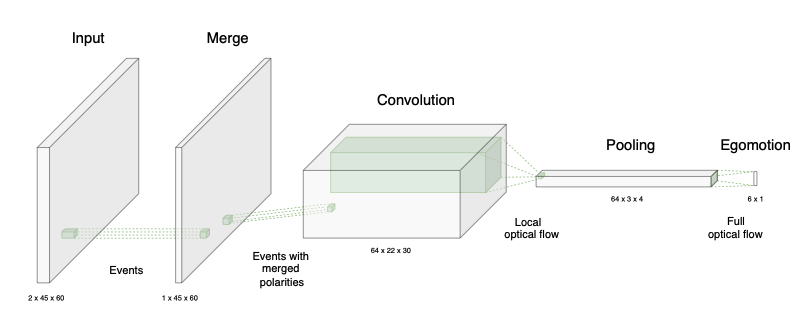

I developed a method for estimating egomotion from event-camera data using a pre-trained optical flow spiking neural network (SNN). To address the aperture problem in the sparse, noisy normal flow produced by the initial SNN layers, I designed and implemented a sliding-window, bin-based pooling layer that computes a fused full flow estimate. To make the estimate robust to noisy flow, I developed an optimization approach based on the intersection of constraints instead of computing egomotion from vector averages. I also integrated a RANSAC step to reject outlier flow estimates in the pooling layer. I validated the approach on both simulated and real scenes, where it achieved results that compare favorably with state-of-the-art methods. The evaluation also revealed a limitation: the method may be sensitive to datasets and motion speeds that differ from those used in training.
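The fusion idea can be illustrated with a minimal NumPy sketch, which is not the implementation described above. It assumes that each event carries a unit normal direction n_i and a signed normal-flow magnitude m_i, so the unknown full flow u of a bin must satisfy n_i · u = m_i. Intersecting these constraints in the least-squares sense, with RANSAC discarding constraints that disagree, yields one fused flow vector per overlapping bin. The function names, bin size, stride, and thresholds are hypothetical choices for illustration, and the sketch stops at full flow rather than egomotion.

```python
import numpy as np


def ioc_full_flow(normals, mags):
    """Least-squares intersection of constraints (illustrative sketch).

    Each sample i restricts the full flow u to the line normals[i] . u = mags[i];
    stacking k >= 2 non-parallel constraints gives N u = m, solved by least squares.
    """
    u, *_ = np.linalg.lstsq(normals, mags, rcond=None)
    return u


def ransac_full_flow(normals, mags, n_iters=100, thresh=0.5, seed=0):
    """Hypothetical RANSAC wrapper: fit u from random pairs, keep the largest consensus."""
    k = len(mags)
    if k < 2:
        return None
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(n_iters):
        idx = rng.choice(k, size=2, replace=False)
        # Near-parallel pairs do not define a unique intersection point.
        if abs(np.linalg.det(normals[idx])) < 1e-3:
            continue
        u = np.linalg.solve(normals[idx], mags[idx])
        inliers = np.abs(normals @ u - mags) < thresh
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers is None or best_inliers.sum() < 2:
        return None
    # Refit on every inlier of the best hypothesis (includes its two non-parallel seeds).
    return ioc_full_flow(normals[best_inliers], mags[best_inliers])


def sliding_window_pool(xy, normals, mags, width, height,
                        bin_size=16, stride=8, min_events=5):
    """Fuse normal flow into full flow over overlapping square bins (hypothetical layout).

    Returns (center_x, center_y, u) for each bin with enough events and a valid fit.
    """
    results = []
    for y0 in range(0, height - bin_size + 1, stride):
        for x0 in range(0, width - bin_size + 1, stride):
            in_bin = ((xy[:, 0] >= x0) & (xy[:, 0] < x0 + bin_size)
                      & (xy[:, 1] >= y0) & (xy[:, 1] < y0 + bin_size))
            if in_bin.sum() < min_events:
                continue
            u = ransac_full_flow(normals[in_bin], mags[in_bin])
            if u is not None:
                results.append((x0 + bin_size / 2, y0 + bin_size / 2, u))
    return results


if __name__ == "__main__":
    # Synthetic check: uniform true flow, random edge orientations, 20% gross outliers.
    rng = np.random.default_rng(42)
    n = 2000
    u_true = np.array([2.0, -1.0])
    xy = rng.uniform(0, 64, size=(n, 2))
    theta = rng.uniform(0, 2 * np.pi, size=n)
    normals = np.stack([np.cos(theta), np.sin(theta)], axis=1)
    mags = normals @ u_true + rng.normal(0, 0.02, size=n)
    bad = rng.random(n) < 0.2
    mags[bad] += rng.choice([-1.0, 1.0], size=bad.sum()) * rng.uniform(3, 10, size=bad.sum())
    fused = sliding_window_pool(xy, normals, mags, width=64, height=64)
    err = max(np.linalg.norm(u - u_true) for _, _, u in fused)
    print(f"{len(fused)} bins, max flow error {err:.3f}")
```

Averaging the normal-flow vectors of a bin would bias the result toward the dominant edge orientation, whereas intersecting the constraints recovers the component of u hidden by the aperture problem. The overlapping bins (stride smaller than bin size) trade extra computation for a denser, smoother flow field.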