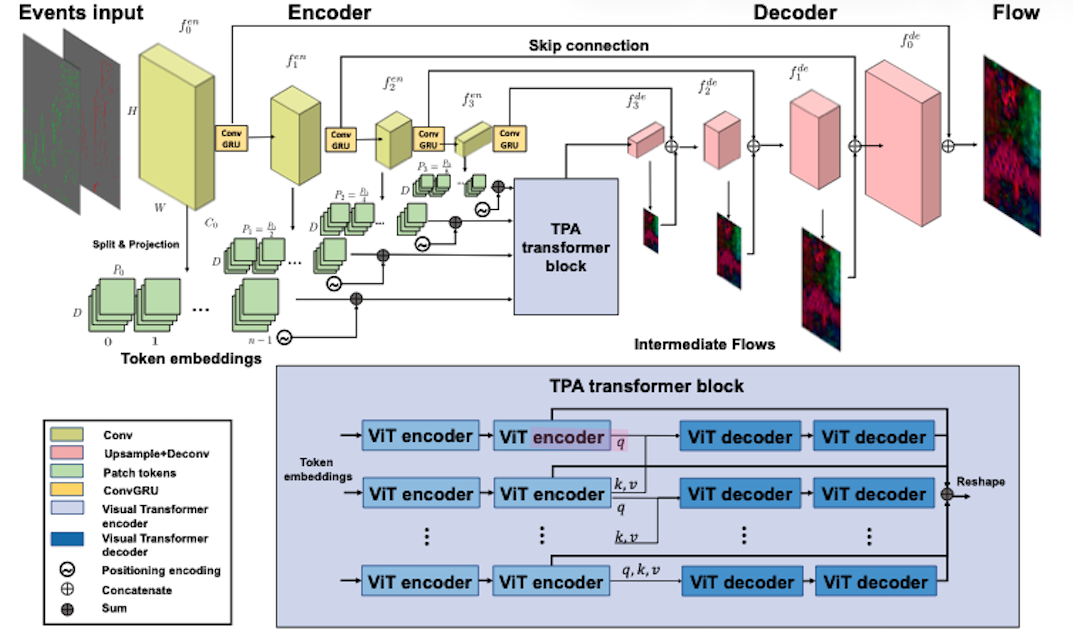

Event transformer FlowNet for optical flow estimation

In this project, I developed ET-FlowNet, a hybrid RNN-ViT architecture for optical flow estimation with event cameras. To my knowledge, this is the first time transformers have been incorporated into event-based optical flow tasks. Event cameras are bio-inspired sensors that produce asynchronous, sparse streams of events, offering low-latency detection of fast motion, high dynamic range, and low power consumption. I designed the architecture to meet the need for fast, robust optical flow computation in robotics applications. Vision transformers (ViTs) are ideal candidates for learning global context in visual tasks, and rigid-body motion is a prime use case for them, since long-range dependencies in the image hold during rigid-body motion. I trained the network end-to-end with a self-supervised learning method. The results are comparable to, and in some cases exceed, those of state-of-the-art coarse-to-fine event-based optical flow estimation.
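To make the idea of an asynchronous, sparse event stream more concrete, here is a minimal sketch of one common way to turn a batch of events into a dense, image-like tensor that a network can consume: a per-polarity event count image. The event tuple layout `(x, y, timestamp, polarity)` and the function name `events_to_count_image` are assumptions made for illustration, and this is not necessarily the input representation ET-FlowNet uses.

```python
from typing import List, Tuple

# Hypothetical event layout: (x, y, timestamp_seconds, polarity), polarity in {+1, -1}.
Event = Tuple[int, int, float, int]


def events_to_count_image(
    events: List[Event], height: int, width: int
) -> List[List[List[int]]]:
    """Accumulate events into a 2 x H x W count image.

    Channel 0 counts positive-polarity events, channel 1 negative ones.
    Timestamps are ignored in this simple representation.
    """
    image = [[[0] * width for _ in range(height)] for _ in range(2)]
    for x, y, _timestamp, polarity in events:
        # Skip events that fall outside the sensor grid.
        if 0 <= x < width and 0 <= y < height:
            channel = 0 if polarity > 0 else 1
            image[channel][y][x] += 1
    return image


# Three in-bounds events and one out-of-bounds event on a 3x2 sensor.
events = [
    (1, 0, 0.001, 1),
    (1, 0, 0.002, 1),
    (2, 1, 0.003, -1),
    (5, 5, 0.004, 1),  # outside the grid, ignored
]
counts = events_to_count_image(events, height=2, width=3)
```

A count image discards the fine timing within the accumulation window, so it trades temporal resolution for a fixed-size input; representations that keep timestamps (for example, time surfaces or voxel grids) are alternatives.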