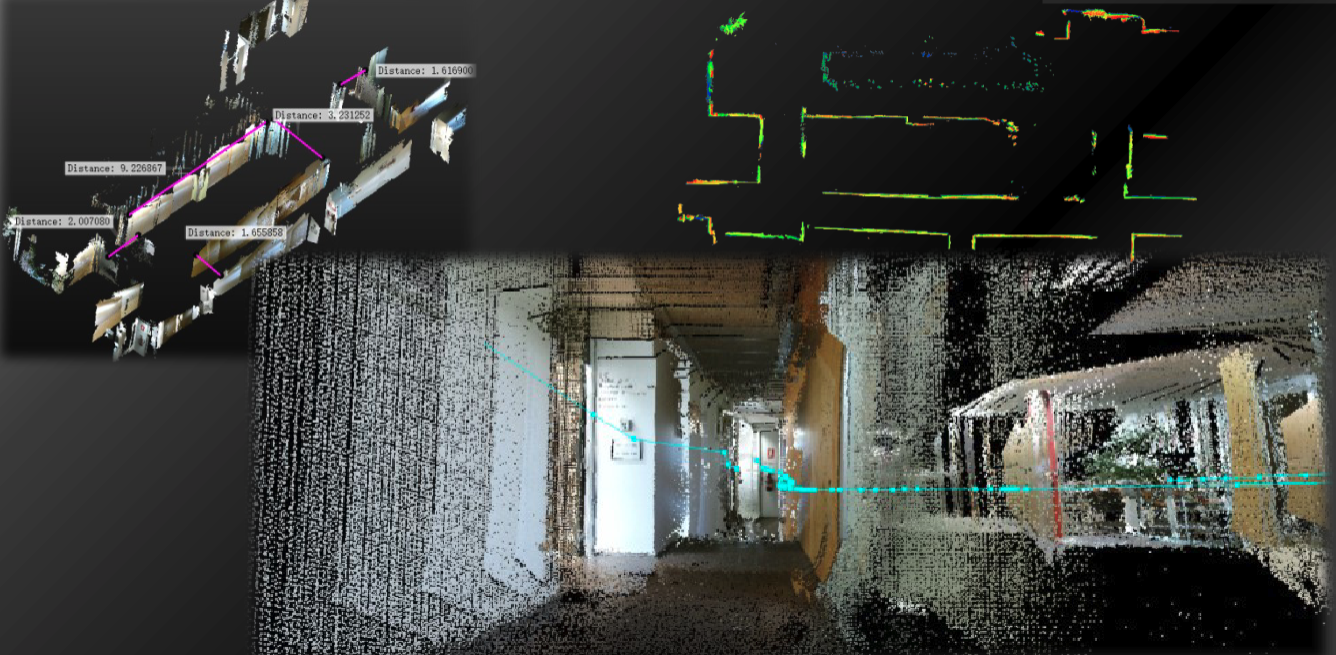

Real-time Human Action Recognition

Developed a human action recognition method based on 3D skeleton data for the Temi assistive robot. Created an Android app that performs human pose estimation, recognizes basic human actions, and records the time spent on each action in real time.
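As a rough illustration of the time-recording step, the sketch below accumulates elapsed time per action label from a stream of per-frame predictions. It is not the app's actual code: the class `ActionTimer`, its methods, and the label strings are hypothetical, and it assumes the recognizer emits one label with a millisecond timestamp per frame. The interval between two consecutive frames is credited to the earlier frame's label.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: accumulates time spent per recognized action label. */
class ActionTimer {
    // Total milliseconds credited to each label, in first-seen order.
    private final Map<String, Long> totals = new LinkedHashMap<>();
    private String lastLabel = null;
    private long lastTimestampMs = 0L;

    /** Call once per frame with the predicted label and the frame timestamp. */
    void update(String label, long timestampMs) {
        // Credit the time since the previous frame to the previous label.
        if (lastLabel != null && timestampMs > lastTimestampMs) {
            totals.merge(lastLabel, timestampMs - lastTimestampMs, Long::sum);
        }
        lastLabel = label;
        lastTimestampMs = timestampMs;
    }

    /** Total time recorded for a label so far, or 0 if it was never seen. */
    long totalMillis(String label) {
        return totals.getOrDefault(label, 0L);
    }
}
```

On Android, a monotonic clock such as `SystemClock.elapsedRealtime()` would be a reasonable timestamp source, since it is not affected by wall-clock adjustments.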